HandyNet: A One-stop Solution to Detect, Segment, Localize & Analyze Driver Hands

Published in IEEE Conference on Computer Vision and Pattern Recognition - 3D HUMANS Workshop, 2018

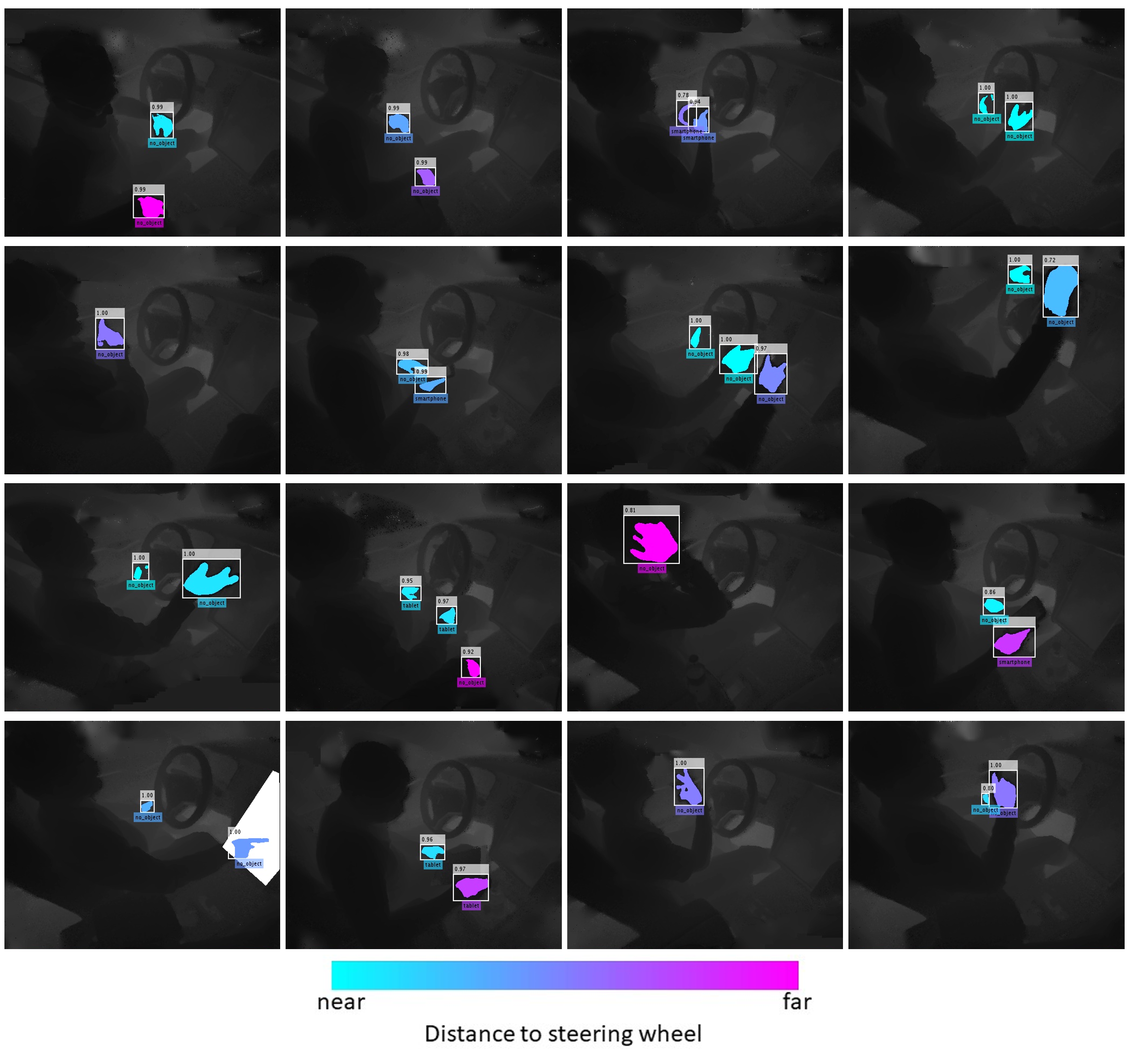

Tasks related to human hands have long been studied in the computer vision community. As the primary actuators for humans, hands convey a lot about activities and intents, and also serve as an alternative form of communication and interaction with other humans and machines. In this study, we focus on training a single feedforward convolutional neural network (CNN) capable of executing many hand-related tasks that may be of use in autonomous and semi-autonomous vehicles of the future. The resulting network, which we refer to as HandyNet, is capable of detecting, segmenting and localizing (in 3D) driver hands inside a vehicle cabin. The network is additionally trained to identify handheld objects that the driver may be interacting with. To meet the data requirements for training such a network, we propose a cheap annotation method based on chroma-keying, thereby bypassing the weeks of human effort otherwise required to label such data. This process can generate thousands of labeled training samples efficiently, and may be replicated in new environments with relative ease.
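
To give a rough sense of how chroma-keying can yield segmentation labels without manual annotation, the following is a minimal sketch and not the pipeline used for HandyNet. It assumes a green key color and RGB input frames; the function name `chroma_key_mask` and the `green_margin` threshold are hypothetical choices made for illustration only.

```python
import numpy as np

def chroma_key_mask(rgb, green_margin=40):
    """Return a boolean mask that is True where a pixel is NOT key-colored.

    Assumption: the key color is green, and a pixel counts as "key" when its
    green channel exceeds both red and blue by more than `green_margin`.
    """
    rgb = rgb.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    is_key = (g - np.maximum(r, b)) > green_margin
    return ~is_key

# Toy 4x4 frame: green background with a 2x2 skin-toned patch in the middle.
frame = np.zeros((4, 4, 3), dtype=np.uint8)
frame[...] = (20, 200, 30)
frame[1:3, 1:3] = (180, 140, 120)

mask = chroma_key_mask(frame)  # True only on the 2x2 patch
```

In a real setup the threshold would need tuning for lighting and camera response, and the resulting mask would typically be cleaned up before being used as a training label.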